Or scp VS tar+ssh VS rsync+ssh VS tar+netcat

In a previous article, in which I showed some uses of tar, I gave an example of how to use it to move large amounts of data between two computers. Many people replied that it is better, or at least that they prefer, to use rsync; others prefer netcat. I remained convinced that tar+ssh is faster than rsync+ssh, so the right thing to do was to run a test in the field and see some numbers.

For the test I'll use 2 computers on my home network, both connected to my router over Wi-Fi. I think the bottleneck (for the ssh tests) will be the CPU of laptop1 (Centrino 1.5 GHz).

So we have:

laptop1: centrino 1500 — ip 192.168.0.10

laptop2: Intel Core2 Duo CPU 2.20GHz — ip 192.168.0.2

Bandwidth test

To get a first idea of what I could expect, I measured the bandwidth between the two PCs with iperf. I did 10 runs and took the average, which seems more realistic than a single shot.

On the client I ran:

    ~ # iperf -c 192.168.0.2

On the server:

    ~ # iperf -s
    ------------------------------------------------------------
    Server listening on TCP port 5001
    TCP window size: 85.3 KByte (default)
    ------------------------------------------------------------
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 40530
    [ ID] Interval       Transfer     Bandwidth
    [  4]  0.0-12.1 sec  3.47 MBytes  2.40 Mbits/sec
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 40531
    [  4]  0.0-12.7 sec  3.94 MBytes  2.61 Mbits/sec
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 40536
    [  4]  0.0-12.3 sec  3.99 MBytes  2.73 Mbits/sec
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 40538
    [  4]  0.0-11.4 sec  3.65 MBytes  2.68 Mbits/sec
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 40540
    [  4]  0.0-19.5 sec  4.01 MBytes  1.72 Mbits/sec
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 40541
    [  4]  0.0-11.3 sec  3.67 MBytes  2.71 Mbits/sec
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 40545
    [  4]  0.0-11.5 sec  3.82 MBytes  2.78 Mbits/sec
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 37802
    [  4]  0.0-10.2 sec   888 KBytes   715 Kbits/sec
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 37812
    [  4]  0.0-12.4 sec  1.59 MBytes  1.08 Mbits/sec
    [  4] local 192.168.0.2 port 5001 connected with 192.168.0.10 port 37814
    [  4]  0.0-11.9 sec  3.88 MBytes  2.74 Mbits/sec

So we have an average speed of 2.2 Mbits/sec. That is not much, but we are interested in comparing results, so absolute speed is not an issue in this test.
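As a quick check of that figure, here is a minimal sketch that averages the ten bandwidth readings from the iperf output. The `average` function name is just an illustration, and the 715 Kbits/sec run is written as 0.715 Mbits/sec so that all values share one unit.

```shell
# Average a column of numbers read from standard input,
# rounded to one decimal place.
average() {
    awk '{ sum += $1 } END { if (NR > 0) printf "%.1f\n", sum / NR }'
}

# The ten iperf readings in Mbits/sec (715 Kbits/sec written as 0.715).
printf '%s\n' 2.40 2.61 2.73 2.68 1.72 2.71 2.78 0.715 1.08 2.74 | average
# prints 2.2
```

The sum of the ten readings is 22.165, which divided by 10 gives 2.2165, in line with the 2.2 Mbits/sec quoted above.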

First test

I'll copy the directory /var/lib/dpkg, which contains 7105 files taking up 61 MB, from laptop1 to laptop2. Because rsync compares the source with the destination and transfers only the differences, I made sure to delete the directory on the receiving side after every run.

This is the script I used to run the tests and record the results:

    #!/bin/bash
    # Each method is run 10 times; the copy on laptop2 is removed after every run.

    for i in `seq 1 10`; do
        time scp -qrp /var/lib/dpkg 192.168.0.2:/test/copy/
        ssh 192.168.0.2 rm -fr /test/copy/dpkg
    done
    echo "End scp"

    for i in `seq 1 10`; do
        time rsync -ae ssh /var/lib/dpkg 192.168.0.2:/test/copy/
        ssh 192.168.0.2 rm -fr /test/copy/dpkg
    done
    echo "End rsync"

    for i in `seq 1 10`; do
        time tar -cf - /var/lib/dpkg | ssh 192.168.0.2 tar -C /test/copy/ -xf -
        ssh 192.168.0.2 "rm -fr /test/copy/*"
    done
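The measure-and-repeat pattern of that script can also be wrapped in a small helper that prints one elapsed time per run, which makes the numbers easier to post-process. This is only a sketch, not the script used for the article: `time_runs` is a hypothetical name, and it assumes a `date` that supports `+%s` (GNU and BSD both do), so the resolution is whole seconds. That is coarse, but adequate for transfers that take a couple of minutes.

```shell
# Run a command N times and print the elapsed wall-clock seconds
# of each run, one value per line.
# Usage: time_runs N command [args...]
time_runs() {
    runs=$1
    shift
    i=0
    while [ "$i" -lt "$runs" ]; do
        start=$(date +%s)          # seconds since the epoch before the run
        "$@" >/dev/null 2>&1       # the command being measured
        end=$(date +%s)            # seconds since the epoch after the run
        echo $((end - start))
        i=$((i + 1))
    done
}
```

For example, `time_runs 10 scp -qrp /var/lib/dpkg 192.168.0.2:/test/copy/` would print ten lines of seconds. The cleanup step on the receiving side would still have to be added between runs, as in the script.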

For netcat I used:

On the receiving end (laptop2):

    # nc -l -p 7000 | tar x

And on the sending end (laptop1):

    # cd /var/lib/dpkg
    # time tar cf - * | nc 192.168.0.2 7000
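Both the ssh and the netcat variants rely on the same idea: one tar writes an archive to standard output and a second tar unpacks it from standard input, with ssh or nc carrying the bytes in between. The sketch below shows that pipe on a single machine, with no network in the middle, plus a file count to confirm that everything arrived. The function names `tar_pipe_copy` and `count_files` are made up for this illustration.

```shell
# Copy the contents of directory $1 into the existing directory $2
# through a tar pipe: the first tar packs to stdout, the second unpacks
# from stdin. Over a network, ssh or nc would sit between the two.
tar_pipe_copy() {
    tar -C "$1" -cf - . | tar -C "$2" -xf -
}

# Count the regular files below a directory, to compare source and copy.
count_files() {
    find "$1" -type f | wc -l | tr -d ' '
}
```

After a real transfer, running `count_files` on both sides is a cheap way to confirm that all files (7105 in this test) were copied.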

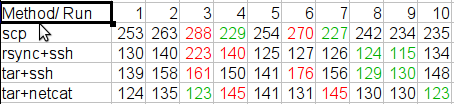

These are the results I got (in seconds).

For every method I dropped the 2 best and the 2 worst results and calculated the average of the remaining 6:

scp: 246 seconds

rsync+ssh: 130 seconds

tar+ssh: 149 seconds

tar+netcat: 131 seconds

So with my computers (and my network) the best way to transfer files appears to be rsync over ssh, with tar+netcat practically tied.
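The averaging rule used for these figures (drop the 2 best and the 2 worst runs, then average the rest) can be sketched as a small filter. The name `trimmed_mean` is hypothetical, and since the individual run times appear only in the chart, the numbers in the usage example are made up purely to show the mechanics.

```shell
# Read one number per line, discard the 2 smallest and the 2 largest,
# and print the mean of the remaining values to one decimal place.
# Fails (exit status 1) when there are 4 values or fewer.
trimmed_mean() {
    sort -n | awk '
        { v[NR] = $1 }
        END {
            if (NR <= 4) exit 1
            for (i = 3; i <= NR - 2; i++) sum += v[i]
            printf "%.1f\n", sum / (NR - 4)
        }'
}

# Made-up sample of ten run times in seconds, NOT the measured data.
printf '%s\n' 10 1 2 3 4 5 6 7 8 9 | trimmed_mean
# prints 5.5
```

In the sample, the values 1, 2, 9 and 10 are discarded and the remaining six (3 through 8) average to 5.5.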

Second test

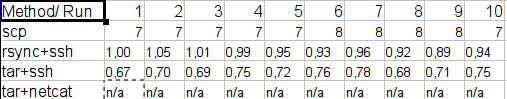

Not satisfied with the results, I ran exactly the same tests on two servers connected via gigabit Ethernet.

Because of some errors I was not able to measure netcat: the transfer worked properly, but the command never exited, so I could not record the times (which were still very low).

Rsync+ssh and tar+ssh completed all the work correctly in less than a second so I’ve included the times to the hundredth of a second.

Conclusion

Honestly I expected a ranking like this: netcat > tar+ssh > rsync+ssh > scp, and I was promptly refuted by the evidence on my PC.

Similar tests published on another blog gave results very close to what I expected, so my conclusions are:

1) Never use scp, it is the worst method you can use

2) Depending on the network and computers you have available you will have different results with rsync and tar.

3) On fast networks tar+ssh seems to perform slightly better. I expect that netcat, which has no encryption (compared to ssh), can give even better results.

4) Rsync can work incrementally, so it has very strong usability advantages over the other methods if you know from the start that the operation will be performed several times (for example, several syncs in the same day between two sites).

I did some similar tests with large datasets (300 GB+) and found tar over ssh to be the most reliable for the speed. One thing I did notice: adding options to the ssh command can really have an impact on performance.

If you are doing file transfers on a local LAN and are not too concerned about full encryption, you may want to add: -c arcfour

Host key checking causes considerable pauses in establishing connections. Once again, if you are on a LAN, you may want to add: -o StrictHostKeyChecking=no

And one other note: if you use publickey authentication, adding -o PreferredAuthentications=publickey can also cut down on the time it takes to establish a connection.

For example:

    tar -cpf - * | ssh -2 -4 -c arcfour -o StrictHostKeyChecking=no -o PreferredAuthentications=publickey -lroot ${REMOTE_HOST} "tar -C ${REMOTE_DIRECTORY}/ -xpf -"

Thanks for the interesting read….

Chad

Thanks for doing this test. Debian and peers steered me to tar, sftp and finally rsync. The man page for rsync in Debian is excellent and contains many common case examples. Thanks to your test, I now know that my preferred method, rsync, is also one of the fastest.

I like rsync so much that I've started using it as an improved copy for local files. Grsync is a nice GUI front end that works well with frequent but irregularly timed syncs because it stores "sessions".

I’m not sure of the syntax you are using for “rsync + ssh.” The manual advises:

rsync -avz source:/local_files/ target:/remote_files

I was under the impression that a single colon invoked ssh by default. “man rsh” brings up the ssh man page. Compression, the -z, might dog slow cpus, but it might be faster than your 802.11B.

Another advantage of rsync is its easy resume capability. I'm not sure how the other methods would handle a break in communication.

Not all that surprising.

SSH really isn't all that CPU-consuming. Sure, it's encryption, but symmetric encryption (which is used after the initial authentication) can be quite fast even in software. For instance, try "dd if=/dev/zero bs=1048576 | openssl enc -blowfish -k somepass > /dev/null". On my machine, it performs at 91.2 MB/s, almost enough to fully saturate a 1 Gbit line with payload, and faster than many disks even in sequential read.

Regarding rsync vs. tar, rsync is optimized for efficient network usage, not efficient disk usage. In your particular case, it sounds like the many small files were the challenge. While rsync is usually the winner in partial updates, it has a strong disadvantage in the first run, since it scans the directory on both machines first to determine which files to transfer.

Also, if the network is the bottleneck and not the CPU, you can optimize your tar example with compression, for instance bzip2 or xz/lzma. My /var/lib/dpkg went from 90 MB to 13 MB using bzip2, with a throughput of 6.1mbit, so adding a -j to your tar line might improve your low-bandwidth example even more than rsync, if you want to prove a point.

Also, rsync uses compression by default, so disabling it /might/ improve performance in the high-bandwidth-case.

Unfortunately, fast ciphers for ssh are currently unavailable, so on fast networks with fast hard drives ssh is much slower than a raw TCP transfer.

Is it possible to run rsync over a netcat transport?